How we won 2nd at Mistral's hackathon

March 28, 2024

Last weekend, Mistral held their inaugural SF hackathon. We ended up winning 2nd place and more importantly, we actually got a good night's sleep.

The focus of the hackathon was to use Mistral's new models and API to build something cool. At the start, they also announced a new 7B model with a 32k context window. There were two tracks: an API one and a fine-tuning one. Working on Village's data infrastructure gave us a good deal of experience with fine-tuning, so we decided to go with that track.

We've tried a few hackathons so far, but our approach has always been chaotic: go in without an idea and frantically brainstorm one in the first few hours. Sometimes it works, but it usually ends up taking a lot more time and stress than expected. So this time, we brainstormed the night before to come up with a few ideas. We ended up going with a project that we've been thinking about for a while.

We've both been reading ML papers to catch up on the latest research, but the sheer volume of papers can be overwhelming. Lots of papers are simply poorly written. Even if you're familiar with a field, low quality prose and inconsistent notation makes understanding a paper hard. And if you're learning a concept for the first time, then you'll probably end up watching a YouTube video or a Medium article explaining the paper instead.

One alternative is organized stores of knowledge, like Wikipedia. These are written in a way that's easy to understand, but they're not always up-to-date, especially if you're really interested in the cutting edge. What if we could automatically generate Wikipedia-style articles on ML papers? Modern LLMs are excellent at retrieving, summarizing, and organizing information, properties that seem perfect for this task.

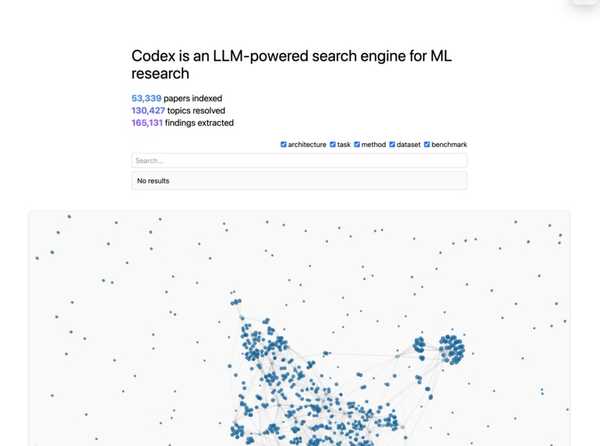

To see if this kind of task was possible, we built Codex, a site that lets you browse ML concepts and generate useful articles on the fly. We already have a fair amount of experience building big knowledge graphs at Village, so we decided to apply a similar set of principles to this problem. In short, we run the following pipeline:

- Collect a bunch of papers from arXiv, around a couple hundred thousand.

- Use Mistral's largest model to extract key insights, datasets, and results from a few papers.

- Use the examples to fine-tune a smaller model to perform the same task.

- Deploy the smaller model to extract structured outputs from the rest of the papers.

After running these steps, we get a very rich knowledge graph of concepts and findings from the papers. We index all of our concepts with Vespa, which lets us search for concepts. When you click on a page for a concept, we load relevant connected findings and use that to generate a Wikipedia-style page from scratch.

If you'd like to check out our code, it's on GitHub.